The feature-rich web has a lot of advantages; the best web-apps are easy to learn, which is great since the learnability threshold is a huge problem with the wonderful world of Unix and worse-is-better that a lot of us are so enamored with. I remember when gratis webmail was first made widely available (with the launch of Rocketmail and Hotmail) and how it made email accessible to a lot of people who didn’t have access to it before: not only to library users, students and other people without ISPs, but also to people who couldn’t figure out how to use their ISP email (or to use it when they were away from home).

But there are also a couple of problems with the feature-rich web:

The web has gone through a couple of stages.

First, it was basic text and hyperlinks, no frills, no formatting.

Then, we were in the font-family, table, img map, Java applet, Shockwave, QuickTime, Flash, broken-puzzle-piece-of-the-week hell for a few years.

Third, CSS was invented and the heavens parted and we had clarity and it was degrading gracefully and could be tweaked and fixed on the user side. (Halfway through the third stage, “Web 2.0”, which means web pages talking to the server without having to reload the entire page, was introduced. I don’t have an issue with that, inherently, and it worked throughout the third stage and we didn’t run into problems until the fourth stage.)

Fourth, client-side DOM generation and completely JavaScript-reliant web pages were invented and we were back in the bad place.

Why were stages one and three so great and stages two and four so bad? Because one and three worked well with scraping, mashing, alternate usages, command line access, other interfaces, automation, labor-saving and comfort-giving devices. Awk & sed. They were also free-flowing and intrinsically device-independent.
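To make the scraping point concrete, here is a minimal sketch in Python, using only the standard library. Both pages are made-up examples, not real sites: `STATIC_PAGE` stands in for a stage-three page where the server sends the actual markup, and `JS_SHELL_PAGE` stands in for a stage-four page where the markup only exists after a script has run. The names `LinkCollector` and `extract_links` are mine, picked for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, link text) pairs from <a> tags in an HTML string."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> we are currently inside, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Return every (href, text) pair found in the given HTML."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical stage-three page: the content is in the markup itself.
STATIC_PAGE = """
<html><body>
  <h1>Posts</h1>
  <ul>
    <li><a href="/posts/1">First post</a></li>
    <li><a href="/posts/2">Second post</a></li>
  </ul>
</body></html>
"""

# Hypothetical stage-four page: an empty shell until the script has run.
JS_SHELL_PAGE = """
<html><body>
  <div id="root"></div>
  <script src="/bundle.js"></script>
</body></html>
"""

print(extract_links(STATIC_PAGE))
print(extract_links(JS_SHELL_PAGE))
```

The first call finds both links; the second finds nothing, because without a JavaScript engine there is nothing in the document to find.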

The web, as presented by stages two and four, is arrogantly made with only one view in mind. It’s like interacting with the site through a thick sheet of glass. Yes, it’s “responsive”, which is great if it really is (i.e. by being a stage one or three type web page), but often it is just a cruel joke of “we are using JavaScript to check what we think your device is and then we are generating a view that we think suits it”. The “device independence” is limited and is only extrinsic.

API endpoints and Atom feeds. The ActivityPub “fediverse” is built on feed tech so it’s got to be doing at least something right, even though it has a lot of problems.
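As a rough illustration of why feeds are so pleasant to work with, here is a sketch that reads an Atom feed with nothing but Python's standard library. The feed content and the `example.org` URLs are invented for the example, and `read_entries` is a hypothetical helper name; only the Atom namespace is the real one.

```python
import xml.etree.ElementTree as ET

# The Atom namespace; element names in a feed live under it.
ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Made-up feed for illustration.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Example blog</title>
  <entry>
    <title>First post</title>
    <link href="https://example.org/posts/1"/>
    <updated>2024-01-01T00:00:00Z</updated>
  </entry>
  <entry>
    <title>Second post</title>
    <link href="https://example.org/posts/2"/>
    <updated>2024-01-02T00:00:00Z</updated>
  </entry>
</feed>
"""


def read_entries(feed_xml):
    """Return a list of dicts with title, link and updated for each entry."""
    root = ET.fromstring(feed_xml)
    entries = []
    for entry in root.findall("atom:entry", ATOM_NS):
        link = entry.find("atom:link", ATOM_NS)
        entries.append({
            "title": entry.findtext("atom:title", default="", namespaces=ATOM_NS),
            "link": link.get("href") if link is not None else None,
            "updated": entry.findtext("atom:updated", default="", namespaces=ATOM_NS),
        })
    return entries


for item in read_entries(SAMPLE_FEED):
    print(item["updated"], item["title"], item["link"])
```

No browser, no script engine: the data is right there in the document, which is the property the stage-one and stage-three web had too.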

And HTMX or Hotwire is a way to get the best of both worlds. Traditionally accessible webpages with modern polish.

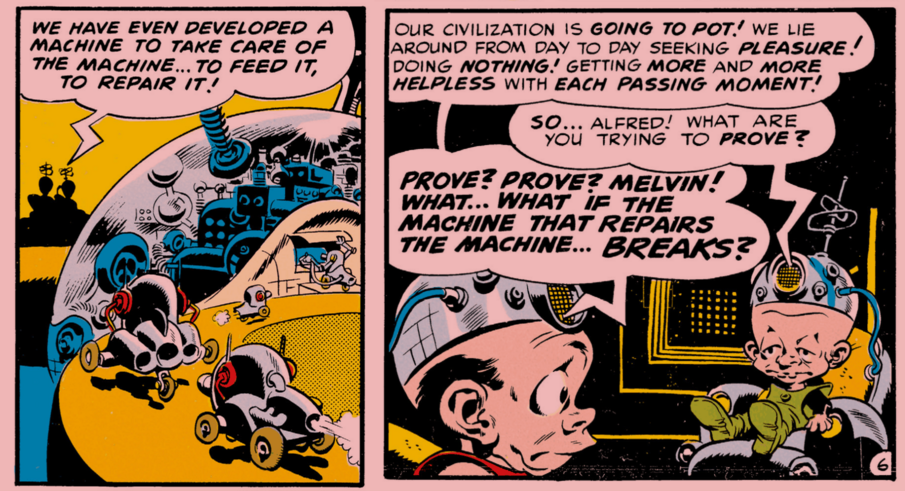

Obviously when your tech is a pile of junk upon junk that you can’t even see the bottom of, let alone understand, you risk running into security issues.

When there’s stuff buried deep in your Rube Goldberg machines that you don’t fully understand, things are gonna break, which is bad if hackers can exploit it to wreck you but it’s also bad on its own, if it just breaks and you can’t repair it. The tragedy of Muine comes to mind; it was the best music player GUI of all time but it ended when the Mono platform it was built on was pulled out from under its feet.

Use, maintain, and teach full-stack (all the way down to the wires and metal), but feel free to stick to the subset of the stack that you’re actually using. Sometimes things fall by the wayside and that’s OK. I remember having to learn about “token-ring networks” in school and in hindsight that was wasted time; in the 25 years that have passed since then I have never used, seen, or even heard of them in real life now that we have Ethernet and wireless. (On the flipside, UDP was pretty rusted compared to TCP but has seen a resurgence with techs like Mosh and WireGuard.)

And then we do have the whole class of problems that stem from how for-profit entities use the web. Trackers, exploiters, siloers, wasm miners, externality-abusers, path-dependency–seekers, monopolists, popup spammers, rootkits, pundits, modals, captchas, machine-generated text, SEO, bad typographers.

It’s a morass of badness out there.

Storm the palace.

The core of my problem with the web is this:

If I wanna access a web resource and I can’t just wget it and parse the tags, if I instead need to boot up a huge eight megabyte Indigo Ramses Colossus (like Safari (a.k.a. Epiphany), Firefox, or Chromium) to do that, because otherwise there is no DOM because it needs to be put together in JavaScript, that’s a problem. “But why not access the web pages normally?”, these site devs ask. Because they are so bad and so inaccessible and so gunked up with user-exploiting bad design decisions.

The flipside is that the Unix aficionado’s solution (“use separate apps, like an IRC client, email app, XMPP app, newsreader app”) is inaccessible since not everyone knows their way around ./configure && make. So maybe the ultimate solution is both. Put your room on IRC but provide a web interface to it. Put your contacts on email but provide a web interface to that too.